Multimodal Interfaces for Communicating Robot Learning

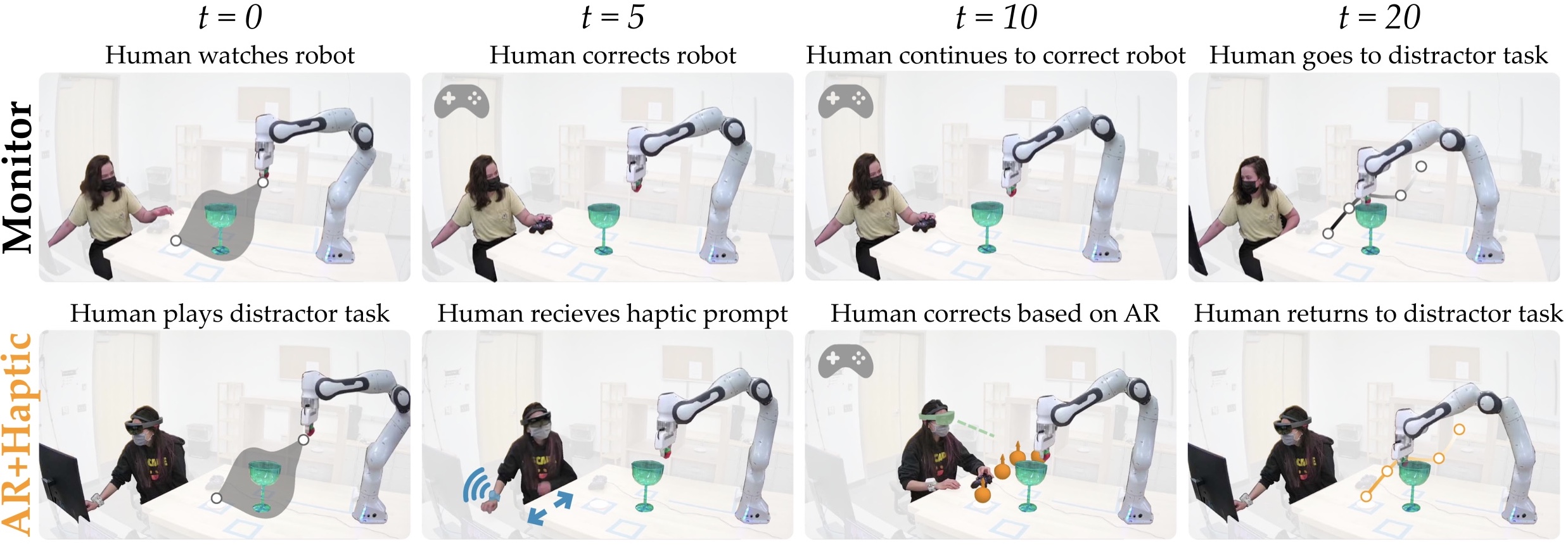

Robots learn as they interact with humans, but how do humans know what the robot has learned and when it needs teaching? We leverage information-rich augmented reality to passively visualize what the robot has inferred, and attention-grabbing haptic wristbands to actively prompt and direct the human’s teaching. We apply our system to shared autonomy tasks where the robot must infer the human’s goal in real time. Within this context, we integrate passive and active modalities into a single algorithmic framework that determines when to provide feedback and which type to provide.
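To make the idea of deciding when to provide feedback and which type concrete, here is a minimal sketch, not the framework from this work. It assumes the robot keeps a Bayesian belief over a small set of candidate goals, that the human is modeled as Boltzmann-rational with a hypothetical rationality constant `beta`, and that normalized belief entropy above a hypothetical `threshold` triggers an active haptic prompt, with passive AR visualization otherwise. The function names, the entropy rule, and all parameter values are illustrative assumptions.

```python
import math


def update_belief(belief, position, action, goals, beta=5.0):
    """Bayesian update over candidate goals.

    Assumption: the human is Boltzmann-rational, so inputs that point
    toward a goal are exponentially more likely under that goal.
    """
    posterior = []
    for prior, goal in zip(belief, goals):
        to_goal = [g - p for g, p in zip(goal, position)]
        norm_goal = math.hypot(*to_goal) or 1.0  # avoid dividing by zero
        norm_act = math.hypot(*action) or 1.0
        # Cosine similarity between the human's input and the goal direction.
        dot = sum(a * d for a, d in zip(action, to_goal))
        alignment = dot / (norm_act * norm_goal)
        posterior.append(prior * math.exp(beta * alignment))
    total = sum(posterior)
    return [p / total for p in posterior]


def entropy(belief):
    """Shannon entropy (in nats) of a discrete belief."""
    return -sum(p * math.log(p) for p in belief if p > 0)


def choose_feedback(belief, threshold=0.5):
    """Pick a feedback modality from the robot's uncertainty.

    Assumption: high normalized entropy means the robot needs teaching,
    so it prompts actively; otherwise it only visualizes its inference.
    """
    max_entropy = math.log(len(belief))
    uncertainty = entropy(belief) / max_entropy if max_entropy > 0 else 0.0
    return "active_haptic" if uncertainty > threshold else "passive_ar"


# Two hypothetical goals in a 2D workspace, with a uniform prior.
goals = [(1.0, 0.0), (0.0, 1.0)]
belief = [0.5, 0.5]
before = choose_feedback(belief)

# The human pushes the robot straight toward the first goal.
belief = update_belief(belief, (0.0, 0.0), (1.0, 0.0), goals)
after = choose_feedback(belief)
print(before, after)
```

In this toy setup the robot starts maximally uncertain and would prompt the human; after one input aimed at the first goal, the belief concentrates and the sketch falls back to passive visualization. A real system would likely also weigh the cost of interrupting the human, which this sketch ignores.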

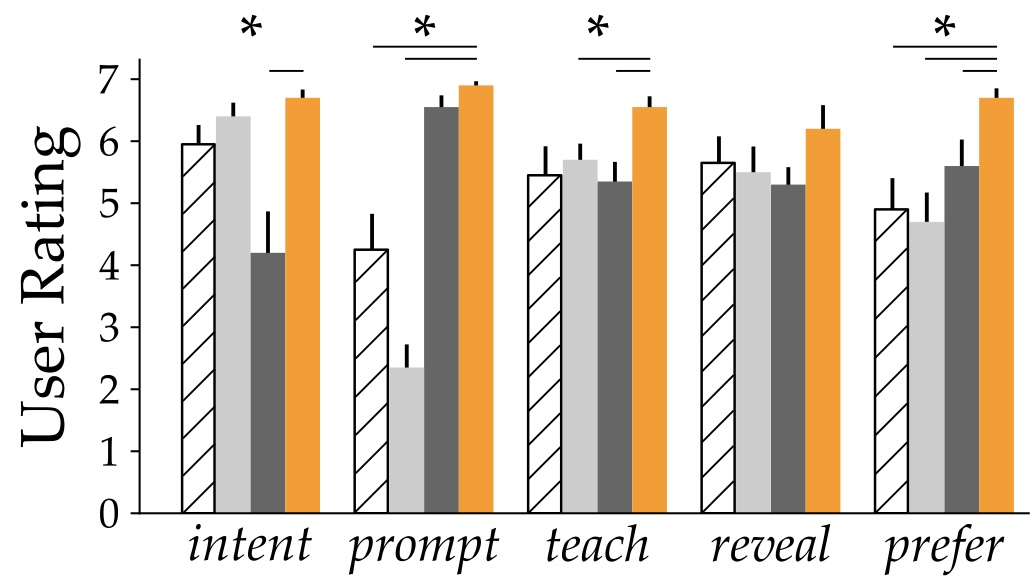

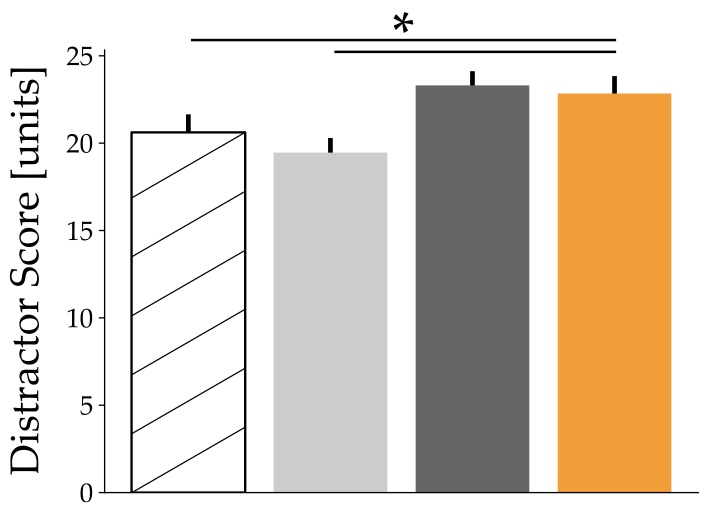

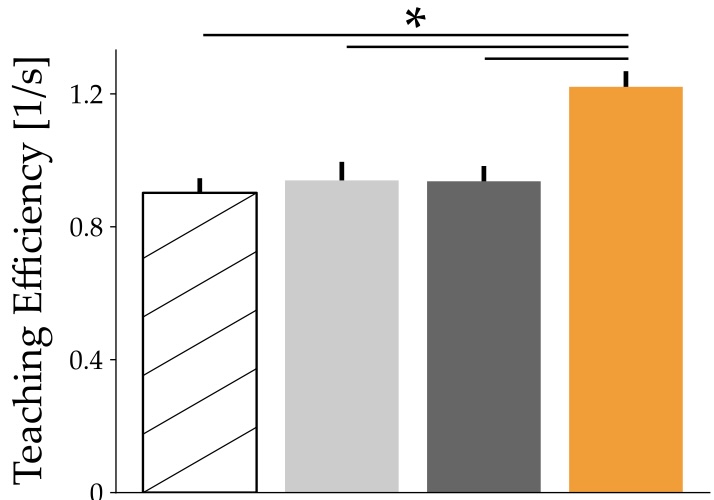

Combining passive and active feedback outperforms single-modality baselines in our experiments: in an in-person user study, our integrated approach increased how efficiently humans taught the robot while simultaneously decreasing the amount of time they spent interacting with it. Videos here.